There are few topics in software engineering that evoke as much passion and strong opinions as testing. This article is neither an introduction to the topic nor a guide or tutorial. What we will cover here is the mindset and general approach that Lemma teams follow to test the code we write.

High-level mindset

Every change made to the product needs to have sufficient high-quality automated test coverage.

Manual testing is the last line of defense, and it is the least effective and most expensive way to ensure correctness and stability.

Manual testing is reserved primarily as a tool within the QA process for exploratory testing that validates acceptance criteria, and for the more subtle, nuanced or less “automatable” aspects of the work, such as evaluating how intuitive or enjoyable a newly implemented feature is.

We keep this mindset purposely abstract because we believe that the implementation of these objectives has to be designed and evaluated for the parameters of each codebase or project.

Ultimately, the combination of testing approaches, methods or tools to be leveraged may change significantly from project to project, but in all cases our objectives for test coverage remain the same, as described below.

Test Coverage

All modules or components of the team’s codebase (including those from network services and imported packages) need tests that verify behaviors covering the acceptance criteria for that feature, including the “happy path” plus edge cases, different data states, error handling, etc.

A feature can be accessed in many ways: with different data, different user roles or permissions, and different integration points. Each of these may affect the behaviour of the code in subtle but crucial ways.

Those tests might be implemented as unit tests, functional tests, integration or even end-to-end tests. The specific testing approach has to be carefully considered and decided by the team.

Enhancements are expected to have extensive tests. Similarly, bug fixes are expected to include regression tests to ensure that the same bug (or rather the same category of bugs) does not manifest again in the future.

Teams at Lemma typically maintain a very high test coverage percentage, but we do not target an arbitrary static number; high coverage is instead a natural corollary or side effect of our number one priority and KPI:

The combined automated tests for a feature (unit, functional, integration and end-to-end) provide the engineering team with a high degree of confidence of its continued correctness, performance and resilience.

This objective usually results in test coverage of 80% or more, but we believe that this metric alone is neither a sufficient indicator nor something worth pursuing in and of itself, and it says nothing about the quality or effectiveness of the tests implemented. Ultimately, the combination of testing approaches, methodologies, selected use cases and scenarios, and tools, together with the quality of execution of each test, is what truly determines the level of confidence earned by the test suite.

Types of Tests

Unit Tests

Unit tests validate that given an initial state, a specific part of the application, in isolation from all others, will behave as expected. Unit tests can be broken down into 3 main stages, commonly referred to as “AAA”:

Arrange: Initializing an encapsulated and isolated part of the application with an initial state and conditions.

Act: Triggering a series of events or changes for which one expects to see the application behave in a specific way.

Assert: Observing the resulting behaviour or output and determining if it matches one’s expectations or not.
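The three stages above can be sketched as a single small test. This is a minimal illustration, not code from a Lemma project: `ShoppingCart` and its methods are hypothetical names invented for the example, and amounts are kept in integer cents to avoid rounding concerns.

```python
class ShoppingCart:
    """Hypothetical unit under test: an in-memory cart with no external dependencies."""

    def __init__(self):
        self.prices_in_cents = []

    def add_item(self, price_in_cents):
        self.prices_in_cents.append(price_in_cents)

    def total(self):
        return sum(self.prices_in_cents)


def test_total_sums_all_added_items():
    # Arrange: an isolated cart in a known initial state.
    cart = ShoppingCart()

    # Act: trigger the events whose effect we want to observe.
    cart.add_item(1000)
    cart.add_item(250)

    # Assert: compare the observed output with the expectation.
    assert cart.total() == 1250
```

A test runner such as pytest would discover and run `test_total_sums_all_added_items` by its name; the same structure applies in any other test framework.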

Writing Code that Is Easy to Test

This requires following the Engineering Standards & Best Practices.

Lemma teams write code that’s deterministic and, when possible, idempotent. This means avoiding code that relies on the time of day or on other external systems. Such reliance can typically be avoided through dependency injection, as explained in Managing Software Complexity.
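As a rough sketch of what injecting a time dependency can look like, the function below receives its clock as an argument instead of reading the system time itself. The `greeting` function and the fixed clocks are assumptions made up for this illustration.

```python
from datetime import datetime, timezone
from typing import Callable


def greeting(now: Callable[[], datetime]) -> str:
    """Return a greeting based on the hour reported by the injected clock."""
    return "Good morning" if now().hour < 12 else "Good afternoon"


def test_greeting_before_noon():
    # The test controls time completely, so the result never depends on when it runs.
    fixed_clock = lambda: datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
    assert greeting(fixed_clock) == "Good morning"


def test_greeting_after_noon():
    fixed_clock = lambda: datetime(2024, 1, 1, 15, 0, tzinfo=timezone.utc)
    assert greeting(fixed_clock) == "Good afternoon"
```

In production code the caller would pass a real clock, for example `greeting(lambda: datetime.now(timezone.utc))`.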

The code is written in atomic increments, easily divisible into small “units” that make the tests naturally correspond to specific parts of the code. This can usually only happen if one follows the Single Responsibility Principle, also referenced in the Engineering Standards & Best Practices.

Teams should avoid writing code with side effects. Such code can be just as hard (sometimes impossible) to test as non-deterministic code. An example would be a function that, when called, sometimes updates a cache based on business logic that’s captured somewhere else in the application.
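One possible way to keep such a cache update testable is to separate the pure computation from the write, and to pass the cache in explicitly so the effect is visible to the caller. The sketch below assumes a hypothetical discount rule (10% on orders of at least 10,000 cents); none of these names come from a real codebase.

```python
from typing import Dict


def compute_discount(order_total_cents: int) -> int:
    """Pure function: the same input always gives the same output, nothing else is touched."""
    return order_total_cents // 10 if order_total_cents >= 10_000 else 0


def cached_discount(order_total_cents: int, cache: Dict[int, int]) -> int:
    """The cache is an explicit argument, so the write is visible and easy to assert on."""
    if order_total_cents not in cache:
        cache[order_total_cents] = compute_discount(order_total_cents)
    return cache[order_total_cents]


def test_compute_discount_is_pure():
    assert compute_discount(20_000) == 2_000
    assert compute_discount(9_999) == 0


def test_cached_discount_writes_to_given_cache():
    cache = {}
    assert cached_discount(20_000, cache) == 2_000
    assert cache == {20_000: 2_000}
```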

Teams should avoid global variables or anything else external to the unit of software under test that dictates how it behaves. An example is a global variable that determines whether to use “celsius” or “fahrenheit.”
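A minimal sketch of the alternative, assuming a hypothetical `format_temperature` function: the unit is passed as a parameter, so each test states the configuration it depends on rather than mutating shared state.

```python
from enum import Enum


class Unit(Enum):
    CELSIUS = "celsius"
    FAHRENHEIT = "fahrenheit"


def format_temperature(celsius: float, unit: Unit) -> str:
    """Format a temperature; the unit is an explicit input rather than a global."""
    if unit is Unit.FAHRENHEIT:
        return f"{celsius * 9 / 5 + 32:.1f} F"
    return f"{celsius:.1f} C"


def test_formats_fahrenheit():
    assert format_temperature(100.0, Unit.FAHRENHEIT) == "212.0 F"


def test_formats_celsius():
    assert format_temperature(21.5, Unit.CELSIUS) == "21.5 C"
```

Because the two tests share no state, they can run in any order or in parallel without affecting each other.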

Lemma teams rely on defensive software design, not defensive coding. The team is clear about the use cases or inputs that their unit of software is expected to handle, and does not preemptively program assertions within the unit itself to protect against misuse or violation of those expectations. Instead, the team designs their software so the integration points guarantee adherence to those expectations.

When the team wants to define guards against misuse, they recognize that as a responsibility in and of itself and design it as its own unit of software that can be tested in isolation. A typical example of that is a FormValidation object.
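To illustrate a guard designed as its own unit, here is a small sketch of a validation object. `SignupForm`, `SignupFormValidation` and the two rules are hypothetical and exist only for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SignupForm:
    email: str
    age: int


class SignupFormValidation:
    """Hypothetical guard: validation lives in one place and is tested in isolation."""

    def errors(self, form: SignupForm) -> List[str]:
        found = []
        if "@" not in form.email:
            found.append("email must contain '@'")
        if form.age < 18:
            found.append("age must be at least 18")
        return found


def test_valid_form_has_no_errors():
    form = SignupForm(email="ada@example.com", age=30)
    assert SignupFormValidation().errors(form) == []


def test_invalid_email_is_reported():
    form = SignupForm(email="not-an-email", age=30)
    assert SignupFormValidation().errors(form) == ["email must contain '@'"]
```

Code downstream of the validation step can then assume a valid form and does not need to repeat these checks.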

Properties of Good Unit Tests

Thorough: Tests should cover all of the important inputs (in terms of state and use cases or events) and validate all expected outputs or behaviors.

Specific: Multiple small, atomic tests, not one big test that validates multiple scenarios at once. When a test fails, the team is able to easily pinpoint the specific behavior that is not meeting their expectations.

Readable: The “Arrange” part of the test clearly describes the scenario being tested, a snapshot of the world at a moment in time in which the team wants to see how their application behaves. The “Act” part clearly tells the story of what event is going to unfold that would trigger the unit of software to behave in a certain way. The “Assert” part clearly describes the behavior that the team expects to see. All together, the narrative of the test is obvious and natural to the reader.

Fast: Paying close attention to the performance of the tests is a key requirement for the operational efficiency of the team. The time spent waiting for tests to run compounds over time and across team members, and if left unchecked it can become a huge impediment to relying on the test suite as often and as thoroughly as needed.

Unit Tests Are Not Integration Tests

Teams use unit tests to focus on simple events (e.g., a specific function being called) rather than to validate complex scenarios (e.g., a very complex set of state conditions).

When the event being triggered is not simple, the team ends up with unit tests that are effectively testing for side effects as described above (interactions that reach into databases, the filesystem or other external APIs).

This doesn’t automatically mean that the test is bad, but it does mean that it has now become an integration test. There are specific tradeoffs between unit tests and integration tests, and a team that doesn’t understand them clearly may have an inaccurate picture of what its tests are in fact validating.

Integration Tests

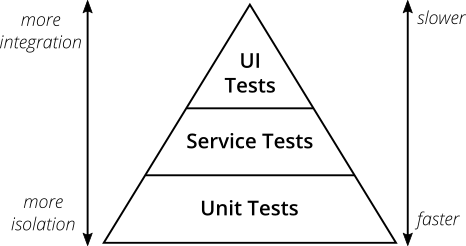

While unit tests focus on the specific behaviors of each atomic unit of the application, Integration Tests (also sometimes referred to as Service Tests) instead focus on how high-level components or systems interact.

As described in the testing pyramid above, these tests are necessarily slower to run, as they require a significantly bigger instantiation of the application and things become harder to isolate.

Integration Tests can apply both to testing how two modules interact with each other and to something much higher level, like testing that an API endpoint behaves as expected in all cases.

The complexity here grows dramatically, as several systems are put to the test at once in order to validate a set of expectations on resulting behavior at an arbitrarily high level of integration vs abstraction.

That said, all of the same considerations for unit tests apply here. Lemma teams ensure that their Integration Tests are:

Thorough: In this case, thorough means that the team invests in defining strategic scenarios that allow them to recreate all of the conditions in which they want to test the system.

For example, the team may test the same feature multiple times, once for each user role (or set of permissions) that users may have, since each may lead to different behaviors.

Specific: Unlike with Unit Tests, the team may not be able to pinpoint the underlying problem in the code just by a test failing, but they nonetheless are able to determine which behavior or expectation is not being met under a specific scenario.

Readable: Same as with Unit Tests, Integration Tests clearly tell the story of the scenario created, the actions or changes the team is applying in that scenario and their expectations for what happens next.

Fast: The team is even more careful about the performance of their Integration Tests, as they are naturally much more expensive to run. If this goes unchecked, it’s not uncommon for a testing suite to quickly start taking hours to run, rather than minutes or seconds.

This has the potential of dramatically affecting the workflow and attitude of the team towards tests, so it is one of the first items to prioritize in terms of paying technical debt.
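The per-role scenario mentioned under "Thorough" can be sketched as follows. This is an illustrative assumption rather than a real Lemma system: two hypothetical modules (`PermissionPolicy` and `DocumentService`) are wired together, and the same scenario is exercised once per role. Everything runs in memory, which also keeps the test fast.

```python
ROLE_CAN_DELETE = {"admin": True, "editor": False, "viewer": False}  # hypothetical policy


class PermissionPolicy:
    def can_delete(self, role):
        return ROLE_CAN_DELETE[role]


class DocumentService:
    def __init__(self, policy, store):
        self.policy = policy
        self.store = store

    def delete(self, role, doc_id):
        """Return an HTTP-style status code for the delete attempt."""
        if not self.policy.can_delete(role):
            return 403
        self.store.pop(doc_id)
        return 204


def run_delete_scenario(role):
    # Arrange: both modules are real; only the storage is an in-memory dict.
    store = {"doc-1": "hello"}
    service = DocumentService(PermissionPolicy(), store)
    # Act: the same action is attempted for the given role.
    status = service.delete(role, "doc-1")
    return status, "doc-1" in store


def test_delete_for_each_role():
    # Assert: one expectation per role, as (status code, document still exists).
    expectations = {
        "admin": (204, False),
        "editor": (403, True),
        "viewer": (403, True),
    }
    for role, expected in expectations.items():
        assert run_delete_scenario(role) == expected, role
```

When an assertion fails, the role name in the failure message points to the scenario that broke, even though the underlying cause may sit in either module.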

End to End Tests

End to end tests are a specific type of Integration Test in which the team tests how systems integrate at the highest possible level: from how a user interacts with a browser all the way to the application reacting to that interaction across the stack and updating the user interface accordingly.

Because of this scope of testing, End to end tests are also commonly referred to as UI Tests, since the user interface is the primary viewport for evaluating the application’s behavior.

These tests are the most expensive, complex and fragile tests possible. Be that as it may, they are also crucial and represent the main strategy for automating QA testing and minimizing one’s reliance on manual testing.

Because of this intent, QA specialists play an important role in working with the rest of the team to define the test scenarios and contribute to their implementation. More on this topic is discussed in our Engineering Quality article.

Testing Methodologies

One last thing… with regard to TDD vs BDD vs ATDD or other methodologies: we believe these all represent nice ways to design software interactively and carefully and as such are good practices to follow. That said, we also believe that ultimately the methodology employed does not have a measurable effect or impact on the outcomes and KPIs we care about, as discussed above.

Each engineer has their own preferences for when and how to write tests. Some really do find it useful to begin by thinking about behaviors to be tested. Other engineers start to draw things on a whiteboard and maybe move to pseudo code before thinking about the tests.

Either way, we believe it’s important not to be prescriptive about specific tools or methodologies. Instead, our guidelines at Lemma are always about seeking alignment on the litmus tests for success: the things we can look at, at the end of the day, to know whether we are achieving our service standards or not.